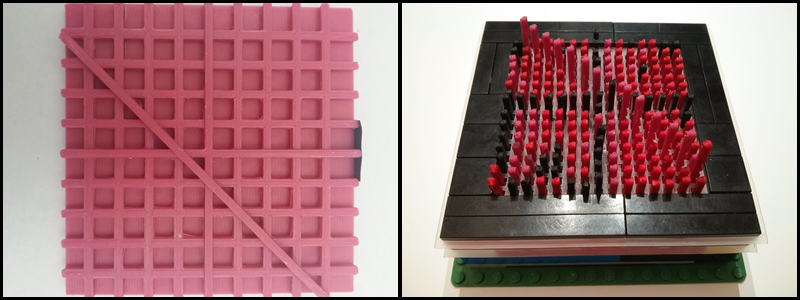

Representation of a graph using a 3D-printed graphic (left) and the tactile screen (right)

This project was a collaboration with Megan Hofmann and Jaime Ruiz in which we explored the feasibility of a do-it-yourself tactile screen. The goal was a device that converts images into tactile graphics automatically, without the help of a sighted collaborator. The system was built primarily from 3D-printed parts, custom perforated plastic sheets, and Lego blocks.

We conducted a user study comparing the tactile screen to 3D-printed and embossed graphics. These tactile media were selected as benchmarks for the quality of information the tactile screen provides. Because the current prototype is controlled manually by researchers, the study was run as a Wizard-of-Oz experiment: participants believed the tactile screen was a computer-controlled device. From this study, we found that the tactile screen is a worthwhile alternative to other tactile media for displaying information. These results were submitted to ASSETS 2015, and the project is currently on hiatus.